Go to Rust: Why Discord is Making the Switch

In this blog, we explore the reasons behind Discord’s switch from the Go programming language to Rust.

Hey everyone,

Have you ever heard of an LRU cache? It’s a cache that keeps recently used data in memory and, when it fills up, evicts the entry that was used least recently. The team at Discord decided to switch the language they use to implement their LRU cache from Go to Rust. In this post, I’m going to explain why they made this decision and how it affected Discord’s performance.

First of all, let’s talk about why Discord uses an LRU cache in the first place. An LRU (Least Recently Used) cache keeps frequently accessed data in memory so that it can be retrieved quickly, without a round trip to slower storage. Because memory is limited, the cache has a fixed capacity: when it is full, the entry that has gone unused the longest is evicted to make room for new data. This reduces the time and resources needed to access data, which can significantly improve the performance of the system.
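To make the eviction rule concrete, here is a minimal sketch of an LRU cache in Rust. It is not Discord’s implementation: the `LruCache` type, its `u64` keys, and its `String` values are assumptions chosen for illustration, and the recency queue is deliberately simple rather than fast.

```rust
use std::collections::{HashMap, VecDeque};

/// A tiny LRU cache (illustrative only).
/// `order` lists keys from least to most recently used.
struct LruCache {
    capacity: usize,
    map: HashMap<u64, String>,
    order: VecDeque<u64>,
}

impl LruCache {
    fn new(capacity: usize) -> Self {
        assert!(capacity > 0, "capacity must be at least 1");
        LruCache {
            capacity,
            map: HashMap::new(),
            order: VecDeque::new(),
        }
    }

    /// Move `key` to the most-recently-used end of the queue.
    fn touch(&mut self, key: u64) {
        self.order.retain(|k| *k != key); // O(n), acceptable for a sketch
        self.order.push_back(key);
    }

    /// Look up a value; a hit counts as a use.
    fn get(&mut self, key: u64) -> Option<&String> {
        if self.map.contains_key(&key) {
            self.touch(key);
        }
        self.map.get(&key)
    }

    /// Insert or update a value, evicting the least recently used
    /// entry first if the cache is full.
    fn put(&mut self, key: u64, value: String) {
        if !self.map.contains_key(&key) && self.map.len() == self.capacity {
            if let Some(oldest) = self.order.pop_front() {
                self.map.remove(&oldest);
            }
        }
        self.map.insert(key, value);
        self.touch(key);
    }
}

fn main() {
    let mut cache = LruCache::new(2);
    cache.put(1, "alice".to_string());
    cache.put(2, "bob".to_string());
    cache.get(1); // key 1 is now the most recently used
    cache.put(3, "carol".to_string()); // cache is full, so key 2 is evicted
    println!("{:?}", cache.get(2)); // None
    println!("{:?}", cache.get(1)); // Some("alice")
}
```

A production cache would usually pair the hash map with a linked list so that both lookups and recency updates take constant time; the queue here trades that speed for readability.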

One of the main reasons that Discord uses an LRU cache is to handle the large amounts of data that are being processed by the platform. With millions of users sending messages and using various features, there is a constant stream of data being processed by the system. By using an LRU cache, Discord is able to store this data in memory and quickly retrieve it when needed, which helps to reduce the workload on the system and improve its overall performance.

Security POV: In addition to improving performance, the LRU cache also helps to improve the security of the system. By storing data in memory, it can be more difficult for malicious actors to access sensitive information. This is especially important for a platform like Discord, which is used by millions of people for communication and collaboration.

In short, the LRU cache is an important feature for Discord, as it helps to improve the performance and security of the platform. By using an LRU cache, Discord is able to handle the large amounts of data being processed by the system and provide a smooth and efficient experience for its users.

Read State Service

Here, the read state service is a feature that allows users to see which messages they have read in a particular channel or conversation. This is particularly useful in large group conversations where there may be many messages being sent at once. By tracking the read state of messages, users can easily keep track of which messages they have already seen and which ones they may have missed.

To implement this feature, Discord stores read-state data in a database, with one record per user per channel rather than one per message. The most frequently used records are kept in the LRU cache, and the data is used to update the display of messages in the user interface, showing which ones have been read and which ones have not.
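As a rough illustration of what such a record could look like, here is a small sketch in Rust. Everything in it is hypothetical: the `ReadState` fields, the `(user_id, channel_id)` key, and the `ack` and `is_unread` helpers are assumptions made for this example, not Discord’s actual schema or API. It also assumes that message IDs increase over time, so a larger ID means a newer message.

```rust
use std::collections::HashMap;

/// Hypothetical read-state record; field names are illustrative.
#[derive(Debug, Clone, PartialEq)]
struct ReadState {
    last_read_message_id: u64,
    mention_count: u32,
}

/// One record per (user_id, channel_id) pair (an assumption of this sketch).
type ReadStates = HashMap<(u64, u64), ReadState>;

/// Mark everything up to `message_id` as read and reset the mention counter.
fn ack(states: &mut ReadStates, user_id: u64, channel_id: u64, message_id: u64) {
    let state = states.entry((user_id, channel_id)).or_insert(ReadState {
        last_read_message_id: 0,
        mention_count: 0,
    });
    // Never move the read marker backwards.
    state.last_read_message_id = state.last_read_message_id.max(message_id);
    state.mention_count = 0;
}

/// A message is unread if it is newer than the stored marker,
/// or if no record exists for this user and channel yet.
fn is_unread(states: &ReadStates, user_id: u64, channel_id: u64, message_id: u64) -> bool {
    match states.get(&(user_id, channel_id)) {
        Some(state) => message_id > state.last_read_message_id,
        None => true,
    }
}

fn main() {
    let mut states = ReadStates::new();
    ack(&mut states, 7, 42, 1_000);
    println!("{}", is_unread(&states, 7, 42, 999)); // false
    println!("{}", is_unread(&states, 7, 42, 1_001)); // true
}
```

Storing a single marker per user and channel keeps each record small, which matters when the records that are in active use have to fit in an in-memory cache.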

The read state service can be particularly useful for users who are part of large communities or teams, as it allows them to easily keep track of important conversations and ensure that they do not miss any important updates or information. It is also a useful tool for moderation, as it can help community managers to keep track of which messages have been seen by users and which ones may require further attention or action.

The Issue

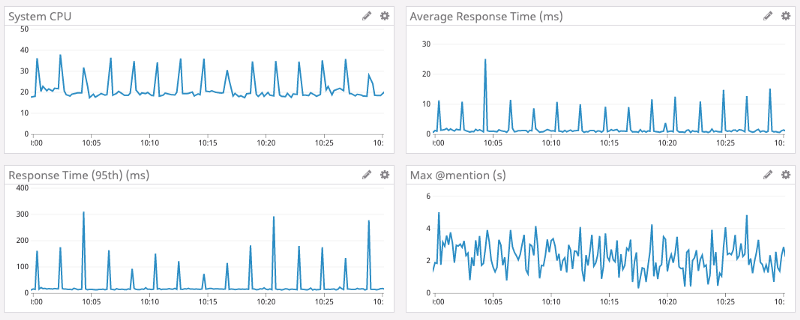

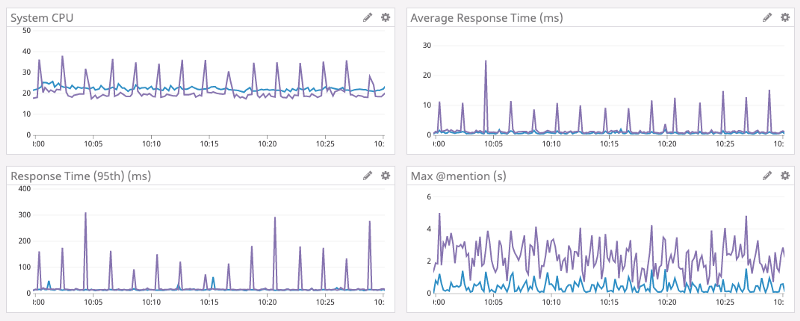

So why did Discord decide to switch the language they use to implement their LRU cache? One of the main reasons was that they were seeing a CPU spike, and with it a jump in latency, roughly every two minutes. After investigating, the Discord team traced the spikes to Go’s garbage collector rather than to their own code: the Go runtime forces a collection at least once every two minutes, and with a large LRU cache in memory each collection had to scan a lot of data to decide what was still in use. This was causing the CPU spike and was impacting the overall performance of the platform.

To solve this issue, the Discord team decided to switch the language they use for their LRU cache from Go to Rust. Both languages are memory-safe, but Rust achieves this without a garbage collector, which makes its performance more predictable when a service holds large amounts of data in memory. The main reason is Rust’s ownership system, which catches common memory errors such as dangling pointers and use-after-free at compile time.

What’s This Ownership System?

The Rust ownership system is a core feature of the Rust programming language that prevents common memory errors such as dangling pointers, double frees, and use-after-free. (Null pointer errors are handled separately: safe Rust has no null references and uses the Option type instead.) It works by giving every piece of data exactly one owner, a specific variable. That variable is responsible for the lifetime of the data: ownership can be moved to another variable, and once the current owner goes out of scope, the data is automatically deallocated.
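Here is a small sketch of that rule in action. The `ReadState` struct and the `DropLog` it writes to are made up for this example; the log exists only so we can observe the order in which values are freed.

```rust
use std::cell::RefCell;
use std::rc::Rc;

/// Shared log of freed channel IDs (exists only for this demo).
type DropLog = Rc<RefCell<Vec<u64>>>;

/// Hypothetical value that records when it is freed.
struct ReadState {
    channel_id: u64,
    log: DropLog,
}

impl Drop for ReadState {
    // Runs automatically at the moment the owner goes out of scope.
    fn drop(&mut self) {
        self.log.borrow_mut().push(self.channel_id);
    }
}

/// Takes ownership of `state`; it is freed when this function returns.
fn consume(state: ReadState) {
    println!("consume now owns channel {}", state.channel_id);
}

/// Returns the channel IDs in the order they were freed.
fn drop_order() -> Vec<u64> {
    let log: DropLog = Rc::new(RefCell::new(Vec::new()));
    let a = ReadState { channel_id: 1, log: Rc::clone(&log) };
    {
        let _b = ReadState { channel_id: 2, log: Rc::clone(&log) };
    } // `_b` goes out of scope here and is freed first
    consume(a); // ownership of `a` moves into `consume`, which frees it
    // println!("{}", a.channel_id); // would not compile: `a` was moved
    let order = log.borrow().clone();
    order
}

fn main() {
    println!("{:?}", drop_order()); // [2, 1]
}
```

Nothing in this program decides at runtime when to free memory. The compiler inserts each cleanup at the point where the owner’s scope ends.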

This is in contrast to the garbage collector used in languages like Go. A garbage collector is a system that automatically deallocates memory that is no longer being used. This can be useful for simplifying memory management and reducing the overhead associated with it. However, garbage collectors can also introduce overhead of their own, as they have to constantly scan the memory to determine which data is no longer being used. This can impact the overall performance of the system.

In short, the Rust ownership system offers a more predictable way to manage memory than a garbage collector, without giving up memory safety. The lifetime of each piece of data is determined at compile time by its owner, so memory is freed as soon as it is no longer needed rather than at the next collection. This can help to improve the overall performance and security of the system.

Problem Solved

The Rust ownership system assigns ownership of a piece of data to a specific variable, which is then responsible for the lifetime of that data. This means that data is automatically deallocated when it is no longer needed, which can help to reduce the overhead associated with memory management.
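For a cache, this means an evicted entry is freed at the moment it is removed. The sketch below shows this with a plain `HashMap` standing in for the cache; the `Entry` type and the `LIVE` counter are assumptions added only to make the effect visible.

```rust
use std::collections::HashMap;
use std::sync::atomic::{AtomicUsize, Ordering};

/// Number of `Entry` values currently alive (for illustration only).
static LIVE: AtomicUsize = AtomicUsize::new(0);

/// Hypothetical cache entry holding some bytes.
struct Entry {
    payload: Vec<u8>,
}

impl Entry {
    fn new(size: usize) -> Self {
        LIVE.fetch_add(1, Ordering::SeqCst);
        Entry { payload: vec![0; size] }
    }
}

impl Drop for Entry {
    // Runs as soon as the entry has no owner left.
    fn drop(&mut self) {
        LIVE.fetch_sub(1, Ordering::SeqCst);
    }
}

fn live() -> usize {
    LIVE.load(Ordering::SeqCst)
}

fn main() {
    let mut cache: HashMap<u64, Entry> = HashMap::new();
    cache.insert(1, Entry::new(1024));
    cache.insert(2, Entry::new(1024));
    let bytes: usize = cache.values().map(|e| e.payload.len()).sum();
    println!("live entries: {}, bytes held: {}", live(), bytes);

    // `remove` hands the entry back; nothing keeps it, so it is freed
    // at the end of this statement, not at some later collection.
    cache.remove(&1);
    println!("live entries after eviction: {}", live());
}
```

The cost of freeing an entry is paid in small pieces, one eviction at a time, instead of in one large scan of everything the cache holds.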

In contrast, languages like Go use a garbage collector to manage memory. While a garbage collector can be useful for simplifying memory management, it can also introduce overhead as it constantly scans the memory to determine which data is no longer being used. This can impact the overall performance of the system and contribute to issues such as a CPU spike.

By using the Rust ownership system instead of a garbage collector, Discord was able to improve the efficiency of its memory management and reduce the overhead associated with it. This helped to solve the problem of the CPU spike and improve the overall performance of the platform. The Rust ownership system is a powerful tool for improving the efficiency and security of a system, and it was a key factor in Discord’s decision to switch to Rust for its LRU cache. By using Rust, Discord was able to improve the performance of its platform and provide a better experience for its users.

Looking Around

When deciding to switch languages, Discord had a few other options to consider. One option is C++, which is a popular language for high-performance systems due to its fast runtime and low-level control. However, C++ leaves memory safety to the programmer and is more prone to memory errors than Rust, which could have made it a less attractive option for Discord’s developers.

Another option is C#, which is a popular language for building applications on the .NET platform. C# is known for its strong type system and high-level abstractions, which can make it easier to write code that is both correct and maintainable. However, C# also relies on a garbage collector, so it would not have removed the kind of overhead that caused the spikes in the first place, which could have made it a less appealing choice for Discord.

Wrapping Up

So there you have it! That’s why Discord recently switched the language they use to implement their LRU cache from Go to Rust. By making this switch, they are positioning themselves to better handle the increasing demand for their platform, ensuring that their users can continue to communicate effectively and without interruption.

Give applause if you liked it!

Image Credits: CoreDump and Discord Blog